
Advantages of Using Docker for Microservices

The development of server-side web applications has changed greatly since Docker’s debut. Thanks to Docker, it’s now easier to construct scalable and manageable applications built of microservices. To help you understand what microservices are and how Docker helps implement them, let’s start with a plausible example.

Imagine you have a certain John Doe on your web development team who uses a Mac. Jane Doe, a co-worker of John’s, works on Windows. Finally, yet another Doe – Jason Doe, the third member of your team – has decided that he works best on Debian. These three (surprisingly unrelated) developers use three different environments to develop the very same app, and each environment requires its own unique setup. Each developer consults 20 pages of instructions on installing various libraries and programming languages and gets things up and running. Still, it’s almost inevitable that libraries and languages will conflict across these three different development environments. Add in three more environments – staging, testing, and production servers – and you start to get an idea of how difficult it is to assure uniformity across development, testing, and production environments.

What do microservices and Docker have to do with the situation above?

The problem we’ve just described is relevant when you’re building monolithic applications. And it will get much worse if you decide to go with the modern trend and develop a microservices-based application. Since microservices are self-contained, independent application units that each fulfill only one specific business function, they can be considered small applications in their own right. What will happen if you create a dozen microservices for your app? And what if you decide to build several microservices with different technology stacks? Your team will soon be in trouble as developers have to manage even more environments than they would with a traditional monolithic application.

There’s a solution, though: using containers to encapsulate each microservice. Docker helps you build and manage those containers.

Docker is simply a containerization tool that was initially built on top of Linux Containers to provide a simpler way to handle containerized applications. We’ll review Docker’s advantages and see how it can help us implement microservices.

Docker Benefits for Microservices in 2020

Containerization, as an alternative to virtualization, has always had the potential to change the way we build apps. Docker, as a containerization tool, is often compared to virtual machines.

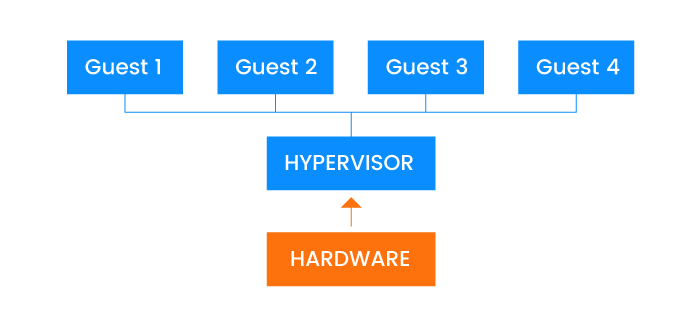

Virtual machines (VMs) were introduced to optimize the use of computing resources. You can run several VMs on a single server and deploy each application instance on a separate virtual machine. With this model, each VM provides a stable environment for a single application instance. Unfortunately, however, when we scale our application we’ll quickly encounter issues with performance, as VMs still consume a lot of resources.

The diagram above shows that a hypervisor is used to run several operating systems on the same server. In the simplest terms, a hypervisor lets several guest operating systems share the hardware of one physical machine, but each VM still carries a complete operating system of its own.

Because microservices are similar to small apps, with VMs we would have to deploy each microservice to its own VM instance to ensure discrete environments. And as you can imagine, dedicating an entire virtual machine to deploying only a small part of an app isn’t the most efficient option. With Docker, however, it’s possible to reduce performance overhead and run many more microservices on the same server, since Docker containers share the host’s kernel and require far fewer computing resources than virtual machines.

So far we’ve been talking about managing environments for a single app. But let’s assume you’re developing two different projects or you need to test two different versions of the same app. Conflicts between app versions or libraries (for projects) are inevitable in this case, and supporting two different environments on the same VM is a real pain.

How Docker Bests Virtual Machines

Contrary to how VMs work, with Docker we don’t need to constantly set up clean environments in the hopes of avoiding conflicts. Each microservice runs in its own container with its own filesystem, libraries, and dependencies, so versions in one container can’t clash with versions in another container or on the host. Containers do share the host’s kernel, but everything above the kernel is isolated.

Thanks to Docker, there’s no need for each developer in a team to carefully follow 20 pages of operating system-specific instructions. Instead, one developer can create a stable environment with all the necessary libraries and languages, save this setup as a Docker image, and push it to the Docker Hub (we’ll talk more about the Hub later). Other developers then only need to pull the image to have the exact same environment. As you can imagine, Docker can save us a lot of time.

If you Google you’ll likely find other benefits of using Docker, such as rapid development speed and freedom of choice in terms of technology stacks. But we’d say that these other benefits that people talk about have nothing to do with Docker itself. It’s actually the microservices-based architecture that lets you rapidly develop new features and choose any technology stack you like for each microservice.

We can sum up Docker’s advantages as the following:

- Faster start time. A Docker container starts in a matter of seconds because a container is just an operating system process. A virtual machine with a complete OS can take minutes to load.

- Faster deployment. There’s no need to set up a new environment; with Docker, web development team members only need to download a Docker image to run it on a different server.

- Easier management and scaling of containers, as you can destroy and run containers faster than you can destroy and run virtual machines.

- Better usage of computing resources as you can run more containers than virtual machines on a single server.

- Support for various operating systems: you can get Docker for Windows, Mac, Debian, and other OSs.

Let’s now take a look at Docker’s architecture to find out how exactly it helps us develop microservices-based applications.

Docker’s Architecture

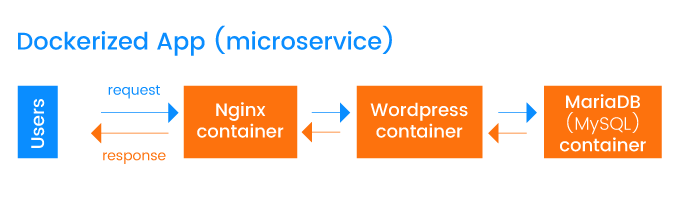

To better understand how Docker works and how to use Docker, we’ll consider a very simple microservice. There are many microservices architecture examples, but we’ve created our own for the purpose of this article:

The microservices-based application shown in the diagram consists of only three services and lets you implement a blog for your website. (All the code for this small app is located on GitHub, so you can check it out for yourself.) Each service – Nginx (web server), MySQL (database; the example uses the MySQL-compatible MariaDB image), and WordPress (blogging engine) – is encapsulated in a container.

The example above doesn’t cover the entire Docker architecture, though, as containers are only one part of it. The Docker architecture includes three chief components – images, containers, and registries. We’ll review each component one by one. But before you can actually use Docker for development, you’ll need to install Docker on your computer.

To make Docker containers work together, we must first register each of them in the docker-compose file, as Docker Compose coordinates all services.

```yaml
version: '3.9'
services:
  nginx:
    build: ./nginx
    restart: always
    depends_on:
      - wordpress
    volumes:
      - demo-wordpress:/var/www/html
    ports:
      - 80:80
  wordpress:
    image: wordpress:php7.1-fpm
    environment:
      WORDPRESS_DB_HOST: mysql
      WORDPRESS_DB_PASSWORD: example
    depends_on:
      - mysql
    volumes:
      - demo-wordpress:/var/www/html
  mysql:
    image: mariadb
    environment:
      MYSQL_ROOT_PASSWORD: example
    volumes:
      - demo-db:/var/lib/mysql
volumes:
  demo-db:
  demo-wordpress:
```

Let’s clarify what’s going on in docker-compose.yml.

We must pay attention to three elements of this file. First, we must specify the app services – “nginx,” “wordpress,” and “mysql.” Second, we must indicate images – note the “image” attribute under services. Lastly, we must specify one more attribute – “volumes.”

Docker Images

Docker containers aren’t created out of thin air. They’re instantiated from Docker images, which serve as blueprints for containers and are the second component in the Docker architecture. To build a Docker image, we use a Dockerfile.

Dockerfiles are just text files that explain how an image should be created. Remember how in docker-compose we didn’t specify an image for Nginx? We didn’t want to simply use a ready-made Nginx image, so we wrote “build.” By doing this, we told Docker to build the Nginx image and apply our own configurations. And to instruct Docker about what image must be used with what configurations, we use Dockerfiles.
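The short “build: ./nginx” form has a longer equivalent that spells out what Compose looks for. The fragment below is a minimal sketch, assuming the Dockerfile sits in ./nginx under its default name; the image tag is a hypothetical name chosen for illustration and is not part of the original project.

```yaml
services:
  nginx:
    build:
      context: ./nginx        # directory sent to Docker as the build context
      dockerfile: Dockerfile  # file name relative to the context (this is the default)
    image: demo-nginx:latest  # hypothetical tag given to the image that gets built
```

Naming the built image this way can make it easier to push the result to a registry later.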

Here’s an example of the Nginx Dockerfile in our small application:

```dockerfile
FROM nginx:stable-alpine
COPY site.conf /etc/nginx/conf.d/default.conf
```

As you can see, we gave only two instructions to Docker. The first line specifies the base image that our own image is built on. In our example, the base image for the Nginx container is “nginx:stable-alpine.” Tags like this make it possible to base images on very specific versions of libraries or languages. The second line copies our configuration file, site.conf, into the image at the path where Nginx looks for its default site configuration. Usually, we place such files in the same directory where the Dockerfile is stored.

Here’s an important detail: a Docker image doesn’t change once it has been built. To alter it, we pull the image from a registry such as the Docker Hub, build a new image on top of it, and push that new version back. Every new container is instantiated from the image specified in docker-compose.yml or built from the Dockerfile, so all containers of a service start from the same state. And because Docker isolates the container environment from the host operating system, you can use a specific version of a library or programming language that will never conflict with the system version of the same library or language on your computer.
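One practical consequence: an image name with no version, such as “mariadb,” resolves to whatever the “latest” tag points at on the day you pull it. The fragment below sketches how versions could be pinned in the Compose file. The “10.5” tag is only an illustrative choice, not the version the original project uses.

```yaml
services:
  wordpress:
    image: wordpress:php7.1-fpm   # already pinned to a PHP variant in the original file
  mysql:
    image: mariadb:10.5           # assumed example tag; use the version you have tested
```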

Docker Volumes

In the docker-compose file, services also have an attribute called “volumes.” Volumes in Docker are the way we handle persistent data that’s used by containers, and different containers can access the same volume. In our file, the Nginx service mounts the same “demo-wordpress” volume as the WordPress service at /var/www/html, so Nginx can serve the files that WordPress stores there.
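If Nginx only needs to read the WordPress files, the shared volume can be mounted read-only on its side. This is a small sketch using the “:ro” suffix of the short volume syntax; the original project mounts the volume read-write.

```yaml
services:
  nginx:
    build: ./nginx
    volumes:
      - demo-wordpress:/var/www/html:ro   # same named volume as the wordpress service, read-only here
```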

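Containers on the same host can share a volume simply by naming it, as Nginx and WordPress do here. If containers are located on different servers but still need access to the same data, the volume must be backed by storage that all of those servers can reach, such as a network file system.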
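One way to set this up is an NFS-backed volume, since a default named volume lives only on the host where it was created. The sketch below uses the local driver’s NFS options. The server address and export path are placeholders, not part of the original project.

```yaml
volumes:
  demo-wordpress:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=nfs.example.internal,rw"   # placeholder NFS server address
      device: ":/exports/wordpress"       # placeholder export path on that server
```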

Image Registries (Repositories)

Until now we’ve only mentioned images and containers – two basic components of Docker’s architecture. But you might be wondering where those images are located. All containers in our example app are built from standard images stored at Docker Hub: the official Nginx, WordPress, and MariaDB images.

Registries are another component of the Docker ecosystem, and the Docker Hub is a great example of a registry. Registries are the places where image repositories are stored. We can push and pull images to and from a registry, run our own private registry for a particular project, and use images from registries as the base for our own images.

At this point, we’ve talked about all three basic components – containers, images, and image repositories. The Docker architecture, however, includes other important components – namespaces, control groups, and Union filesystems (UnionFS). Namespaces let us separate containers from one another so they can’t see each other’s processes, networks, or filesystems; control groups limit and account for the hardware resources (CPU, memory, I/O) that each container uses; and UnionFS stacks read-only image layers under a writable container layer, which is what lets images share common building blocks.

We should mention, however, that these additional components weren’t actually created by Docker: they are Linux kernel features that were available before Docker and were already used by Linux Containers (LXC). Docker just uses the same concepts for container management.
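Control groups are what sit behind resource limits in a Compose file. The fragment below is a minimal sketch, assuming a Compose version that honors “deploy.resources” (older docker-compose releases apply it only in swarm mode or with the --compatibility flag). The numbers are arbitrary.

```yaml
services:
  wordpress:
    image: wordpress:php7.1-fpm
    deploy:
      resources:
        limits:
          cpus: '0.50'    # at most half of one CPU core
          memory: 256M    # memory cap enforced through control groups
```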

We’ve introduced a lot of new information so far, so let’s boil it down to the following key points:

- Dockerfiles contain important instructions to work with Docker images, which are used for constructing containers.

- Each microservice that needs a custom image has its own Dockerfile with specific instructions for that image; services that run a ready-made image unchanged don’t need one.

- Docker containers are always created from the specified Docker images (this is how Docker ensures consistency across environments).

- You can use pre-built Docker images that are stored in public registries such as Docker Hub, and can change these base images via configurations to adapt them for your applications.

- You need to register all application microservices in the docker-compose file.

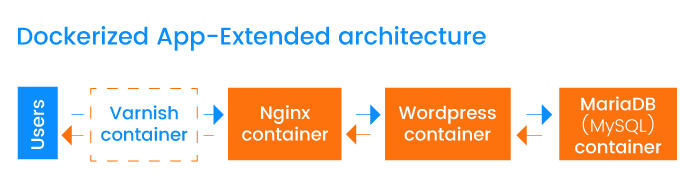

Extending the Architecture of a Microservices-Based App with Docker

The initial application architecture we provided in our example can easily be extended with other services. Let’s say we wanted to cache requests to our app. Let’s add a service called Varnish. Varnish will let us cache HTML pages, images, CSS, and JavaScript files that are often requested by users. Our updated microservice-based app will look like this:

The code below shows an extended Docker Compose file with a Varnish service:

```yaml
version: '3.9'
services:
  varnish:
    build: ./varnish
    ports:
      - 80:80
    depends_on:
      - nginx
  nginx:
    build: ./nginx
    restart: always
    depends_on:
      - wordpress
    volumes:
      - demo-wordpress:/var/www/html
  wordpress:
    image: wordpress:php7.1-fpm
    environment:
      WORDPRESS_DB_HOST: mysql
      WORDPRESS_DB_PASSWORD: example
    depends_on:
      - mysql
    volumes:
      - demo-wordpress:/var/www/html
  mysql:
    image: mariadb
    environment:
      MYSQL_ROOT_PASSWORD: example
    volumes:
      - demo-db:/var/lib/mysql
volumes:
  demo-db:
  demo-wordpress:
```

We only need to add one more service to the docker-compose.yml file, configure it, and move the published port 80 from Nginx to Varnish so that incoming requests reach the cache first. If we decide not to use Varnish, we can simply remove it from docker-compose and restore the port mapping on Nginx. Again, you can follow along with the simple WordPress container project on GitHub to see the updated parts of the app for yourself.

That’s nearly all you need to know to manage containers with Docker. There’s one thing that might still be bugging you, though: How can we manage hundreds or thousands of Docker containers across multiple servers? The final section will help you answer this question.

Managing Docker-based Apps with Container Orchestration Systems

Docker lets us deploy microservices one by one on a single host (server). A small app (like our example app) with fewer than a dozen services doesn’t need any complex management. But it’s best to be ready for when your app grows. If you run several servers, how can you deploy a number of containers across all of them? How can you scale those containers up and down? Docker’s ecosystem includes Container Orchestration Systems to address these problems.

A Container Orchestration System is an additional tool you should use with Docker. Until the middle of 2016, Docker didn’t actually provide any specific way to manage applications built of thousands of microservices. But now there’s Docker Swarm, a built-in framework for orchestrating containers.

Docker now comes with a special mode – swarm mode – that you can use to manage clusters of containers. Docker Swarm lets you use the Docker CLI to run swarm commands, so you can easily initialize groups of containers and add and remove containers from those groups. Besides Docker Swarm, there are several other container orchestration managers you could consider as well:

- Kubernetes, a container cluster manager. You can run Kubernetes on your own servers or in the cloud.

- DC/OS, a distributed operating system built on Apache Mesos that gives you an advanced graphical user interface to manage Docker containers.

- Nomad, a workload scheduler from HashiCorp that can work with Docker to help you deploy and manage your applications on your own servers or on cloud providers such as AWS, DigitalOcean, Azure, or the Google Cloud Platform.
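For Docker Swarm specifically, a Compose file can carry a “deploy” section that swarm mode reads when the file is deployed as a stack, for example with “docker stack deploy.” The fragment below is a sketch for the WordPress service from the example, with an arbitrary replica count.

```yaml
version: '3.9'
services:
  wordpress:
    image: wordpress:php7.1-fpm
    deploy:
      replicas: 3                # run three copies of the service across the swarm
      restart_policy:
        condition: on-failure    # restart a replica only if it exits with an error
```

Note that a stack deployment ignores “build” entries, so services such as Nginx in the example would first need their images built and pushed to a registry.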

If you’re interested in cloud solutions that can help you run Dockerized applications and, more importantly, orchestrate containers, you should consider the following:

- Google Cloud Platform, with support for Kubernetes through Google Kubernetes Engine (GKE), a managed service formerly called Google Container Engine.

- Amazon Web Services. AWS offers the Elastic Container Service (ECS) to run and manage Docker containers. As an option, you can use Elastic Kubernetes Service (EKS) to work with Kubernetes on AWS.

- Microsoft Azure. Azure Container Service was a hosting solution similar to Amazon ECS that supported various frameworks for orchestrating dockerized applications, including Kubernetes, DC/OS with Mesos, and Docker Swarm; it has since been succeeded by Azure Kubernetes Service (AKS).

All three platforms now let you both deploy containerized applications directly and manage your containers with Kubernetes.
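As a rough illustration of what working with Kubernetes looks like, here is a minimal Deployment manifest for the WordPress service from the example. The names, labels, and replica count are assumptions made for this sketch and don’t come from the original project.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress            # hypothetical name
spec:
  replicas: 3                # arbitrary number of identical pods
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress       # must match the selector above
    spec:
      containers:
        - name: wordpress
          image: wordpress:php7.1-fpm
          ports:
            - containerPort: 9000   # php-fpm port exposed by the fpm image variants
```

A manifest like this is typically applied with “kubectl apply -f,” after which Kubernetes keeps the requested number of replicas running.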

Using microservices and containers is considered the proper modern way to build scalable and manageable web applications. If you don’t containerize microservices, you’ll face a lot of difficulties when deploying and managing them. That’s why we use Docker: to keep environments consistent and avoid trouble when deploying microservices. Add in a Container Orchestration System, and you’ll be able to run your dockerized applications across as many servers as you need.