How to Make an Augmented Reality Mobile App for iOS 11

On September 12, 2017, Apple held its first keynote event at the Steve Jobs Theater. The company announced several new devices including the cutting-edge iPhone X and iPhone 8.

That was the hardware side. On the software side, the headliner was the brand-new iOS 11.

What’s so special about this mobile operating system? Actually, a lot. But we’d like to highlight one of the core features of iOS 11 – support for augmented reality applications created with ARKit, Apple’s new framework for creating augmented reality (AR) experiences.

Though AR has already become a buzzword, the technology is still in its early days and many software developers don’t have a full understanding of how to create augmented reality mobile applications.

We’ve decided to shed light on this issue and guide you on your way to creating awesome augmented reality mobile apps for iOS 11.

Advantages of Apple’s ARKit SDK

Experienced mobile developers might ask why we chose ARKit for building augmented reality mobile apps over other AR development kits. First of all, ARKit is Apple’s own AR SDK, so it’s perfectly tailored to iOS 11. Secondly, the ARKit SDK offers a number of advantages that help it stand out from other similar development kits.

Let’s briefly go over the biggest advantages of the ARKit SDK.

- ARKit is supported on all Apple devices that run iOS 11 and have an A11 (the newest), A10, or A9 chip. This means there’s already a huge potential market for augmented reality applications created with ARKit, as millions of owners of the following Apple devices can use such apps:

  | iPhone | iPad |
  | --- | --- |
  | iPhone X | 12.9" iPad Pro (2nd generation) |
  | iPhone 8 Plus | 10.5" iPad Pro (2nd generation) |
  | iPhone 8 | iPad (5th generation) |
  | iPhone 7 Plus | |
  | iPhone 7 | |
  | iPhone 6s Plus | |
  | iPhone 6s | |
  | iPhone SE | |

- ARKit uses so-called Visual Inertial Odometry (VIO) for tracking the environment and placing virtual objects with great accuracy and without any calibration. VIO uses several sensors to track where the device is: the camera, accelerometer, and gyroscope. In fact, VIO can be considered a form of the SLAM systems used by some other AR SDKs.

- Apple’s augmented reality SDK boasts advanced scene analysis capabilities. It can analyze scenes and detect horizontal planes to place virtual objects on. ARKit can also estimate the amount of light in each scene and adjust the lighting of virtual objects accordingly.

- In combination with the iPhone X, ARKit enables revolutionary face tracking capabilities. The sensors on the iPhone X can detect the expression on a user’s face, which means it’s possible to apply this expression to virtual objects (for example, animated emojis).

- ARKit works with popular third-party engines such as Unity and Unreal Engine. These tools allow developers to create compelling virtual objects with advanced graphics.

As you can see, ARKit is a truly powerful and technologically advanced tool that allows developers to create fantastic augmented reality experiences.

Getting Started with the ARKit SDK

It’s time to start making an augmented reality mobile application with ARKit. Before getting to work you need the appropriate tools, so let’s go over the toolkit we used to create our sample AR mobile app.

We decided to build a native iOS application without using any third-party solutions, so we needed only two pieces of software:

- Xcode 9. The latest version of the integrated development environment for creating applications for all Apple platforms. Xcode 9 contains ARKit for building AR apps.

- iOS 11. Make sure to use an Apple device with the latest version of iOS; otherwise, ARKit won’t work. iOS 11 is available now (and owners of supported devices can update to iOS 11 anytime), but it hadn’t been officially released when we started building our AR mobile app, so we used the beta version.

Before you start building an augmented reality mobile app with ARKit, you need to understand the two things its content consists of:

- SCNScene. A container for the hierarchy of nodes that form a displayable 3D scene. Attributes such as geometries, cameras, and lights are attached to the nodes in this hierarchy.

- 3D object. A virtual object displayed on the screen in an augmented reality application. Any 3D object can be added to SCNScene, so you can use whatever 3D object you like.

Step-by-Step Guide to Building an Augmented Reality Mobile App for iOS 11

We decided to make a sample augmented reality mobile application featuring a quadcopter that users can move in all directions. Of course, you can try building a similar AR app featuring a different 3D object – it’s all in your hands.

Step #1 Creating a Project

This is probably the simplest step in developing an augmented reality app with ARKit. To start your project, launch Xcode 9 and create a new Single View Application in the iOS section.

Step #2 Adding ARKit SceneKit View

Now you need to add ARKit SceneKit View to your Storyboard. ARSCNView is used for displaying virtual 3D content on a camera background. Don’t forget to adjust the constraints so that ARSCNView works on the full screen of the device.

Finally, create an IBOutlet in the ViewController.

Step #3 Enabling the Camera

ARKit is a session-based framework, so each session has a scene that renders virtual objects in the real world. To enable rendering, ARKit needs to use an iOS device’s sensors (camera, accelerometer, and gyroscope).

ARKit renders every virtual object according to its position and orientation relative to the camera, which means that an AR mobile application needs to use the camera. To allow it to do so, set up the scene in the viewDidLoad method and run the session in the viewWillAppear method:
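The original listing isn’t reproduced here, so the following is only a minimal sketch of that setup. The outlet name `sceneView` is an assumption, and the sketch uses the `ARWorldTrackingConfiguration` class name from the public iOS 11 release.

```swift
import UIKit
import SceneKit
import ARKit

class ViewController: UIViewController {

    // Outlet connected to the ARKit SceneKit View in the storyboard.
    // The name `sceneView` is an assumption.
    @IBOutlet weak var sceneView: ARSCNView!

    override func viewDidLoad() {
        super.viewDidLoad()
        // Give the view an empty scene to render on top of the camera feed.
        sceneView.scene = SCNScene()
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking is unavailable on unsupported devices, so bail out there.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        // Start tracking; this is the point where the camera is switched on.
        sceneView.session.run(ARWorldTrackingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Pause tracking while the view is off screen.
        sceneView.session.pause()
    }
}
```

Pausing the session in viewWillDisappear isn’t mentioned in the text; it’s included here because it’s the usual counterpart to running the session in viewWillAppear.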

When users launch an augmented reality mobile application, they must allow the app to use the camera. To request access to the camera from a user, you need to add a new row to the Info.plist file: Privacy – Camera Usage Description (the raw key is NSCameraUsageDescription).

If you skip this step, the application will respond with an error as it won’t have permission to use the camera. You can edit the text displayed in the camera user permission request.

To check that you’ve done everything right, run your AR app; if you see the camera working, then you’re on the right track.

Step #4 Adding a 3D Object

It’s time to finally add a 3D object to our augmented reality mobile application. We’ve already mentioned that you can pick any virtual object you want, but let’s check what file formats are supported by ARKit.

ARKit SceneKit View supports several file formats, namely .dae (digital asset exchange), .abc (alembic), and .scn (SceneKit archive). When .dae or .abc files are added to an Xcode project, however, the editor automatically converts them to SceneKit’s compressed files that retain the same extensions. To serialize an SCNScene object, create a .scn file by converting the initial .dae or .abc file.

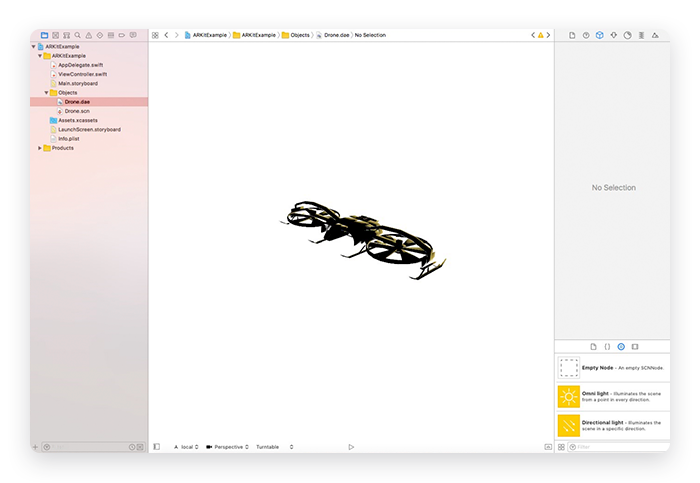

For this sample AR mobile app for iOS, we downloaded a nice 3D quadcopter in the .dae format. To add it to your application, create an Objects folder in Xcode and place the file in it. The file is still in .dae format, so select it and use Editor > Convert to SceneKit scene file format (.scn) to produce a .scn file. Keep in mind that the initial .dae file mustn’t be replaced.

As you can see, both .dae and .scn files are present in the Objects folder:

Step #5 Displaying a 3D Object in the Scene

The 3D object has already been added to our project, but it’s just a visual object kept in a folder. It’s time to have it displayed in the application, which means we need to add the object to the SCNScene.

Since we’re using SceneKit, a 3D object model must be a subclass of SCNNode, so we need to create a new class (we’ve called it Drone, though you may call it whatever you like) and load the initial file containing the object (in our case, Drone.scn). Here’s the code we used to add the quadcopter to the scene:
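The listing itself is missing from this page, so here is a minimal sketch of such a class. The class name `Drone` and the file name `Drone.scn` come from the text above; the method name `loadModel` and the wrapper node are assumptions.

```swift
import SceneKit

class Drone: SCNNode {

    // Loads the converted scene file and attaches its contents to this node.
    // `loadModel` is a hypothetical name.
    func loadModel() {
        // SCNScene(named:) returns nil if the file is not in the app bundle.
        guard let modelScene = SCNScene(named: "Drone.scn") else { return }

        // Collect the model's top-level nodes under one wrapper node.
        let wrapperNode = SCNNode()
        for child in modelScene.rootNode.childNodes {
            wrapperNode.addChildNode(child)
        }
        addChildNode(wrapperNode)
    }
}
```

If the file sits inside an .scnassets folder rather than a plain group, the name passed to `SCNScene(named:)` would need to include that folder’s path.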

Having configured this SCNNode, we must initialize an object of the Drone class and add it after the setup configuration:
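As a rough sketch, adding a node to a scene comes down to attaching it to the scene’s root node. The helper `addModel(_:to:)` is hypothetical, and a plain SCNNode stands in for the Drone object so that the snippet is self-contained.

```swift
import SceneKit

// Attaches a model node to the scene's root node so SceneKit renders it.
// `addModel` is a hypothetical helper.
func addModel(_ model: SCNNode, to scene: SCNScene) {
    scene.rootNode.addChildNode(model)
}

// Stand-ins: in the app, `model` would be the Drone instance (after its
// file has been loaded) and `scene` would be the ARSCNView's scene.
let scene = SCNScene()
let model = SCNNode()
addModel(model, to: scene)
```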

If we run our augmented reality mobile app now, we’ll see a quadcopter in its default position on our iPhone’s screen. Needless to say, if you can’t see a 3D object, go back and check whether you’ve done everything correctly. In our sample app, the quadcopter in the default position looked like this:

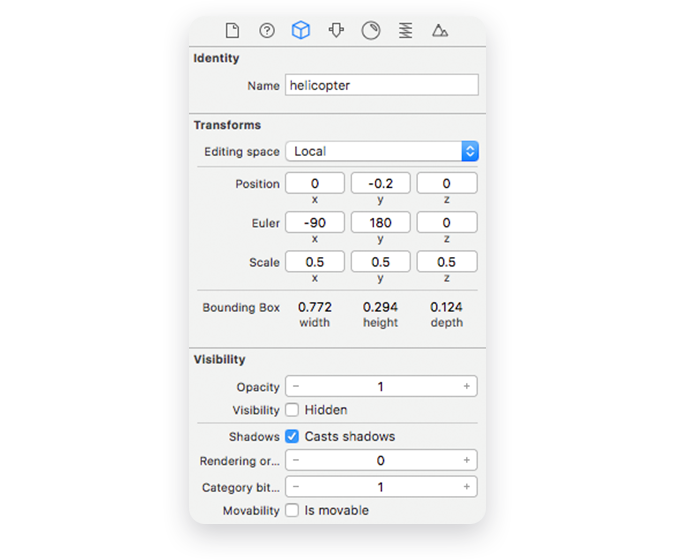

The default position of the 3D object looks unnatural, so we decided to change it. For the design of this quadcopter, we experimented a lot till we chose the following parameters:
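The parameter values from the original listing aren’t shown on this page. The sketch below only illustrates which SCNNode properties such parameters would typically set; every number in it is a made-up placeholder, not the value used for this quadcopter.

```swift
import SceneKit

// Applies an initial placement to a model node.
// All values are illustrative placeholders (assumptions).
func applyInitialPlacement(to node: SCNNode) {
    // ARKit measures distances in meters; at session start the camera
    // looks along the negative Z axis, so -1 is one meter in front of it.
    node.position = SCNVector3(0, -0.2, -1.0)
    // Shrink the model uniformly to 20% of its authored size.
    node.scale = SCNVector3(0.2, 0.2, 0.2)
    // Turn the model a quarter turn around the vertical axis (radians).
    node.eulerAngles.y = .pi / 2
}

let placedNode = SCNNode()
applyInitialPlacement(to: placedNode)
```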

This is what our quadcopter looked like after we set these parameters:

If you go for a different 3D object, feel free to experiment with its position.

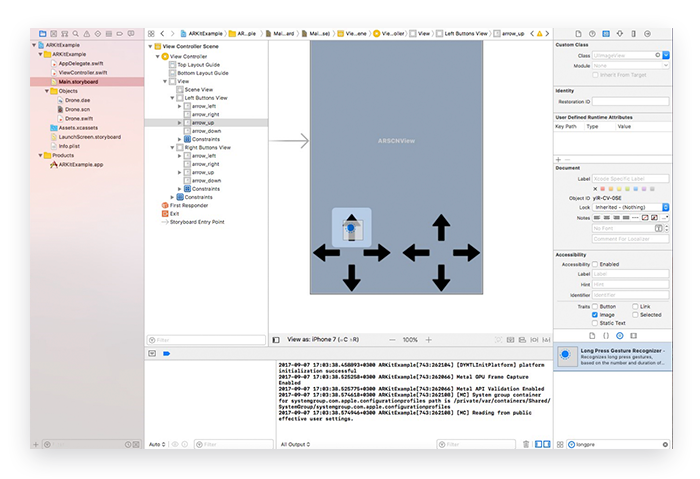

Step #6 Setting Up Control Buttons

The application seems to be working and the quadcopter is displayed in the scene, but there’s still one major thing to do. So far, the quadcopter is a motionless AR object that looks nice but doesn’t move. It’s time to add controls.

It goes without saying that you can pick any design for your controls. For this sample augmented reality mobile application, we chose pointers. Once you’ve settled on your control buttons, move the initial files to the Assets folder and add them to ARSCNView.

It’s convenient to organize all control buttons in two similar sets and place them in the lower left-hand and lower right-hand corners of the screen. One set of controls is responsible for moving the quadcopter up and down and left and right, while the other rotates it and moves the quadcopter forward and backward. To set up the controls, you need to use SCNAction and two methods.

The moveBy(x:y:z:duration:) method is in charge of creating the action that moves the node relative to its current position.

The parameters in this method are (as per Apple):

- x – the distance to move the node in the X direction of its parent node’s local coordinate space

- y – the distance to move the node in the Y direction of its parent node’s local coordinate space

- z – the distance to move the node in the Z direction of its parent node’s local coordinate space

- duration – the duration of the animation (in seconds)

The rotateTo(x:y:z:duration:) method is responsible for creating an action that rotates the node to absolute angles around each of its three principal axes.

The parameters in this method are (as per Apple):

- x – for rotating the node counterclockwise around the X-axis of its local coordinate space (measured in radians)

- y – for rotating the node counterclockwise around the Y-axis of its local coordinate space (measured in radians)

- z – for rotating the node counterclockwise around the Z-axis of its local coordinate space (measured in radians)

- duration – the duration of the animation (in seconds)
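To make the two methods concrete, here is a small sketch that creates one action of each kind and runs them in sequence. The distances, angles, and durations are arbitrary example values.

```swift
import SceneKit

let node = SCNNode()

// Move 0.5 units up along the Y axis of the parent's coordinate space
// over one second, relative to wherever the node currently is.
let moveUp = SCNAction.moveBy(x: 0, y: 0.5, z: 0, duration: 1.0)

// Rotate to an absolute angle of pi/2 radians (90 degrees) around the
// Y axis over one second, regardless of the node's current rotation.
let turn = SCNAction.rotateTo(x: 0, y: .pi / 2, z: 0, duration: 1.0)

// Run the move first, then the rotation.
node.runAction(SCNAction.sequence([moveUp, turn]))
```

Note the difference: moveBy is relative, so repeating it keeps moving the node, whereas rotateTo targets a fixed orientation.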

Now that you know what methods you need to use, it’s time to start setting up the control buttons. You need the Long Press Gesture Recognizer to control the movements of the quadcopter. This tool allows users to move the 3D object as long as a control button is pressed, so when users release the button the quadcopter stops moving. It’s just like on your game console controller.

You can start from any control button you like; we started from the one responsible for moving the quadcopter up.

An important note: change the minimum duration of Long Press Gesture Recognizer to 0 seconds so that the object starts moving as soon as the control button is pressed.

Next, create an IBAction (we called it upLongPressed, though you can name it whatever you want) and, finally, add some code that makes the button work:

You also need to use an additional method that controls the execution of actions (it works for the action above as well as some other actions):
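Since the listings are missing here, the sketch below shows one way the action and the helper could fit together. The IBAction name `upLongPressed` comes from the text; the class name, the `drone` stand-in, the constants, and the shape of `execute` are assumptions.

```swift
import UIKit
import SceneKit

class DroneControlsViewController: UIViewController {

    // Stand-in for the Drone node (hypothetical property name).
    let drone = SCNNode()

    // Hypothetical tuning constants: distance covered per repetition
    // and the time each repetition takes.
    let movingLengthPerLoop: CGFloat = 0.05
    let animationDuration: TimeInterval = 0.2

    @IBAction func upLongPressed(_ sender: UILongPressGestureRecognizer) {
        let action = SCNAction.moveBy(x: 0, y: movingLengthPerLoop, z: 0,
                                      duration: animationDuration)
        execute(action: action, state: sender.state)
    }

    @IBAction func downLongPressed(_ sender: UILongPressGestureRecognizer) {
        // Same as above with a negative Y distance.
        let action = SCNAction.moveBy(x: 0, y: -movingLengthPerLoop, z: 0,
                                      duration: animationDuration)
        execute(action: action, state: sender.state)
    }

    // Repeats the action while the button is held and stops it on release.
    // Xcode 9 / Swift 4 spells this type UIGestureRecognizerState.
    func execute(action: SCNAction, state: UIGestureRecognizer.State) {
        switch state {
        case .began:
            drone.runAction(SCNAction.repeatForever(action))
        case .ended, .cancelled, .failed:
            drone.removeAllActions()
        default:
            break
        }
    }
}
```

Passing the recognizer’s state rather than the recognizer itself is a design choice made for this sketch; it keeps the helper easy to call from any of the buttons.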

Run the application and check whether the button works. If it does (and we hope it does!), you’re almost done.

Just use the same tool, Long Press Gesture Recognizer, to set up the remaining three control buttons. Here’s the code we used to...

...move the 3D object down:

...move the 3D object left:

...move the 3D object right:

Combined with these actions, you need a couple of additional methods.

This method factors in the quadcopter’s current rotation angle and calculates the coordinates the quadcopter needs to move in the correct direction:
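The calculation can be sketched as plain trigonometry. The version below is an illustration, not the original method: the `HorizontalDirection` type and the `offset(for:yaw:step:)` function are hypothetical, and it assumes the model’s nose points along its local negative Z axis when the rotation angle is zero.

```swift
import Foundation

// Horizontal directions handled by the movement buttons (hypothetical type).
enum HorizontalDirection {
    case forward, backward, left, right
}

// Converts a direction into x/z distances in the parent's coordinate space,
// given the node's rotation around the Y axis (yaw, in radians) and the
// distance to cover per step.
func offset(for direction: HorizontalDirection,
            yaw: Float, step: Float) -> (x: Float, z: Float) {
    let s = step * sin(yaw)
    let c = step * cos(yaw)
    switch direction {
    case .forward:  return (x: -s, z: -c)
    case .backward: return (x: s, z: c)
    case .left:     return (x: -c, z: s)
    case .right:    return (x: c, z: -s)
    }
}

// With zero yaw, "forward" moves straight along the negative Z axis.
let straight = offset(for: .forward, yaw: 0, step: 0.05)

// After a quarter turn counterclockwise, "forward" points along negative X.
let turned = offset(for: .forward, yaw: .pi / 2, step: 0.05)
```

The resulting x and z values are what would be passed to moveBy(x:y:z:duration:), with y left at 0 so that the altitude doesn’t change.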

The following method is used for configuring and executing the correct movement of the quadcopter (by setting concrete coordinates):

Now it’s time to set up the four remaining control buttons. As you’ve probably guessed, you need the Long Press Gesture Recognizer. Let’s start with the button responsible for rotating the quadcopter left.

We’ll need to create an IBAction and add the following code:

To determine the rotation angle and execute the rotation, use the following additional method:
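A hedged sketch of such a helper follows. It deliberately swaps in SCNAction’s rotateBy(x:y:z:duration:) instead of rotateTo: because rotateBy is relative, the action can simply be repeated for as long as the button is held. The function name and the step size are assumptions.

```swift
import SceneKit

// Hypothetical step: radians turned per repetition.
let rotationPerLoop: CGFloat = 0.2

// Builds an action that turns a node around its Y axis by the given angle.
// Positive angles rotate counterclockwise (to the left when seen from
// above), negative angles rotate clockwise (to the right).
func rotationAction(yRadian: CGFloat, duration: TimeInterval) -> SCNAction {
    return SCNAction.rotateBy(x: 0, y: yRadian, z: 0, duration: duration)
}

let rotateLeft = rotationAction(yRadian: rotationPerLoop, duration: 0.2)
let rotateRight = rotationAction(yRadian: -rotationPerLoop, duration: 0.2)
```

With rotateTo, the same effect would require computing a new absolute target angle from the node’s current rotation on every repetition.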

Setting up the button that rotates the 3D object to the right requires this code:

The additional method from the previous example works for this IBAction too.

Now it’s time to set up the last two control buttons.

Move forward:

Move backward:

Finally, test your AR mobile app: run it and try to control the 3D object with all eight buttons. If all of them work as they’re supposed to, you’ve done everything right.

Check out a video of our AR application:

Ready to Build Augmented Reality Applications with ARKit?

Apple’s new augmented reality framework is a powerful tool for creating incredible experiences. In this article, we showed you how to create a fairly simple AR app. However, even this small task requires deep knowledge and a lot of skills.

If you face some difficulties making your own AR app, check out the full code we used in this repository on GitHub.